k8s Workloads


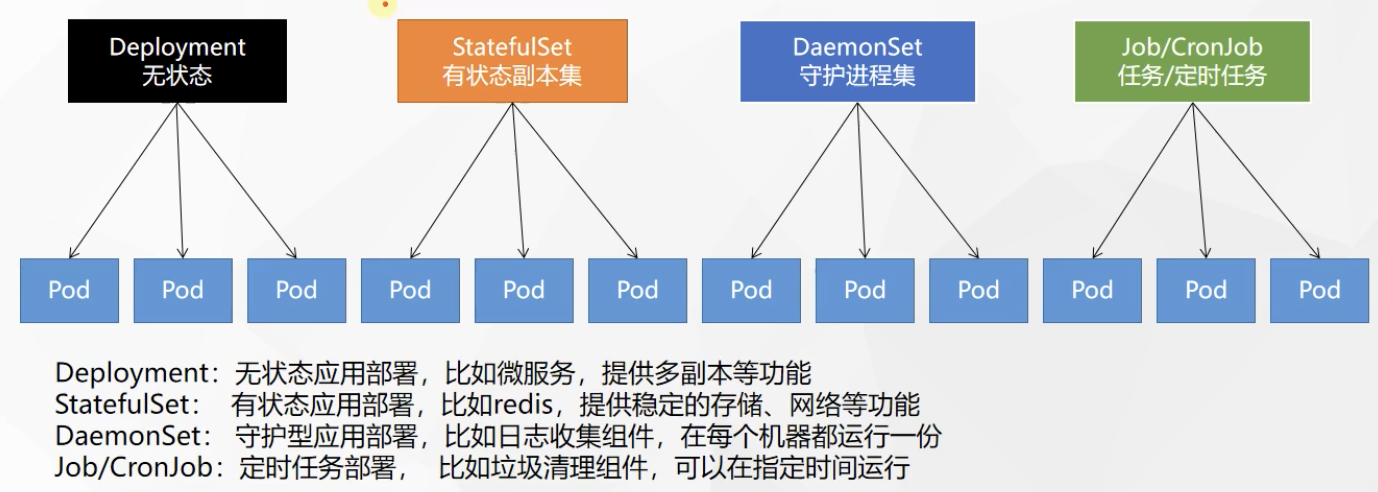

# 0. k8s Workloads

Pod (literally a "pea pod"): the Pod is the smallest resource unit in a k8s cluster, and every workload resource exists to manage Pods.

- doc: https://kubernetes.io/zh/docs/concepts/workloads/controllers/

- zh: https://kubernetes.io/zh-cn/docs/concepts/workloads/

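- Deployment
  - Stateless application deployment, e.g. microservices. Provides multiple replicas and related features. On rollback or failover, the data held in the Pod is lost and the Pod's IP may change.
- StatefulSet
  - Stateful application deployment, e.g. Redis or databases. Provides stable storage and a stable network identity, so data is preserved across rollback or failover.
- DaemonSet
  - Daemon-style application deployment, e.g. log collection agents. Exactly one copy runs on each node.
- Job/CronJob
  - Batch and scheduled task deployment. A Job runs Pods to completion, and a CronJob creates Jobs on a schedule.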
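These notes do not come back to DaemonSet later, so here is a minimal sketch of one. It assumes a hypothetical log-collector image `example.com/log-agent:v1` and the hypothetical name `log-agent`. The point it illustrates is that a DaemonSet has no `replicas` field, because the controller places one Pod on each eligible node.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: log-agent                       # hypothetical name
  namespace: kube-system
spec:
  selector:
    matchLabels:
      app: log-agent
  template:
    metadata:
      labels:
        app: log-agent
    spec:
      containers:
      - name: log-agent
        image: example.com/log-agent:v1 # hypothetical log-collector image
        volumeMounts:
        - name: varlog
          mountPath: /var/log
          readOnly: true
      volumes:
      - name: varlog
        hostPath:
          path: /var/log                # each copy reads the log directory of its own node
```

Nodes with taints (control-plane nodes, for example) only get a copy if the Pod template adds matching tolerations.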

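# 1. Pod

doc: https://kubernetes.io/zh-cn/docs/concepts/workloads/pods/#pods-and-controllers

Pod ("pea pod"): comparable to a Docker container. A Pod is a wrapper one level above Docker containers.

A Pod is a group of running (Docker) containers, and it is the smallest unit of an application in Kubernetes.

Pods are isolated from each other. Containers inside the same Pod share storage and network resources: they have one IP, and port numbers must be unique within the Pod. You can think of a Pod as a small virtual machine.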

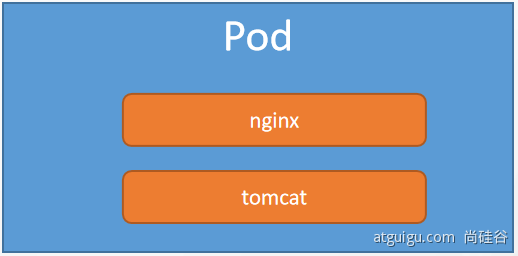

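A Pod can wrap several services (Docker containers).

k8s assigns an IP to every Pod. You can see it with `kubectl get pod -o wide`.

Some Pods have Init containers in addition to app containers. Init containers run to completion before the app containers start.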
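Here is a minimal sketch of a Pod with an Init container, following the pattern used in the official Pod documentation. The Pod name `init-demo` is hypothetical. The Init container assumes that a Service named `my-dep` (created in section 4) exists in the same namespace. The nginx container does not start until that name resolves.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: init-demo                 # hypothetical name
spec:
  initContainers:
  - name: wait-for-service
    image: busybox:1.28
    # loops until the Service name resolves; the app container starts only after this exits successfully
    command: ['sh', '-c', 'until nslookup my-dep; do echo waiting for my-dep; sleep 2; done']
  containers:
  - name: nginx
    image: nginx
```

While the Init container is still looping, `kubectl get pod init-demo` shows a status such as `Init:0/1`.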

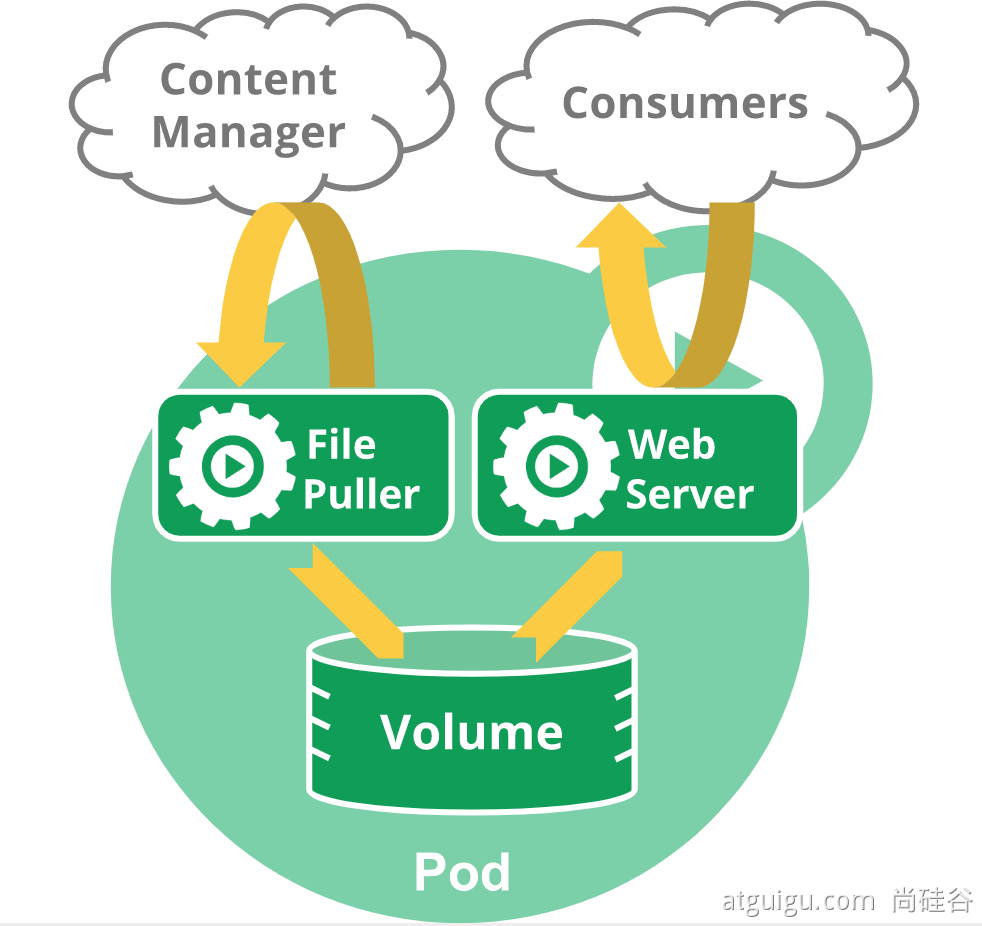

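Pods natively provide two kinds of shared resources to their member containers: networking and storage.

# 1.1 What Pods are used for

- Workload resources are used to manage Pods.

- Pods that run a single container. The "one container per Pod" model is the most common Kubernetes use case. Here you can think of a Pod as a wrapper around a single container, and Kubernetes manages the Pod rather than the container directly.

- Pods that run multiple containers that need to work together. A Pod can encapsulate an application made of several co-located containers that are tightly coupled and need to share resources. These co-located containers form a single cohesive unit of service. For example, one container serves files from a shared volume to the public, while a separate "sidecar" container refreshes or updates those files. The Pod packages these containers and their storage into one manageable entity.

- Grouping multiple co-located, co-managed containers in one Pod is a relatively advanced use case. Use this pattern only when your containers are tightly coupled.

- Each Pod is meant to run a single instance of a given application. To scale the application horizontally (for example, to run more instances and get more resources), use multiple Pods, one per instance. In Kubernetes this is usually called replication. Replicated Pods are normally created and managed as a group by a workload resource and its controller.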

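The sidecar description above can be made concrete with a sketch of a two-container Pod. The names (`web-with-sidecar`, `shared-html`, `refresher`) are hypothetical. nginx serves files from a shared `emptyDir` volume, and a busybox sidecar rewrites `index.html` every 10 seconds. Both containers also share the Pod's network namespace, so they could reach each other on `localhost`.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: web-with-sidecar          # hypothetical name
spec:
  volumes:
  - name: shared-html
    emptyDir: {}                  # lives as long as the Pod and is mounted by both containers
  containers:
  - name: web                     # serves whatever is in the shared volume
    image: nginx
    volumeMounts:
    - name: shared-html
      mountPath: /usr/share/nginx/html
  - name: refresher               # sidecar that rewrites the page every 10 s
    image: busybox:1.28
    command: ['sh', '-c', 'while true; do date > /html/index.html; sleep 10; done']
    volumeMounts:
    - name: shared-html
      mountPath: /html
```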

# 1.2 Creating a Pod

From the command line

```bash
kubectl run mynginx --image=nginx   # create a Pod named mynginx from the nginx image
kubectl get pod -n default          # list Pods; -n selects the namespace (default: default), -A lists Pods in all namespaces
kubectl describe pod mynginx        # show details of the mynginx Pod

# list Pods in the default namespace
kubectl get pod
kubectl get pod -n default
kubectl get pod -n default -w       # watch in real time

# Pod details
kubectl describe pod <pod-name>

# delete Pods
kubectl delete pod <pod1-name> <pod2-name> -n default   # -n selects the namespace (default: default)
kubectl delete pod --all -n default                     # delete all Pods in the namespace

# view a Pod's logs
kubectl logs <pod-name>

# k8s assigns an IP to every Pod
kubectl get pod -o wide
# access the Pod with its IP plus the port of the container running in it
curl 192.168.169.136

# open a shell in the Pod
kubectl exec -it mynginx -- /bin/bash
exit   # leave the Pod

# any machine and any application in the cluster can reach the Pod through its assigned IP

# watch in real time
watch -n 1 kubectl get pod   # -n 1 refreshes every 1 s
```

From a configuration file

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: mynginx
  name: mynginx
  # namespace: default
spec:
  containers:
  - image: nginx
    name: nginx
  - image: tomcat:8.5.68   # a Pod can specify several containers
    name: tomcat1
```

Apply the configuration file with:

```bash
kubectl apply -f xxx.yml
```

Or create it from the dashboard:

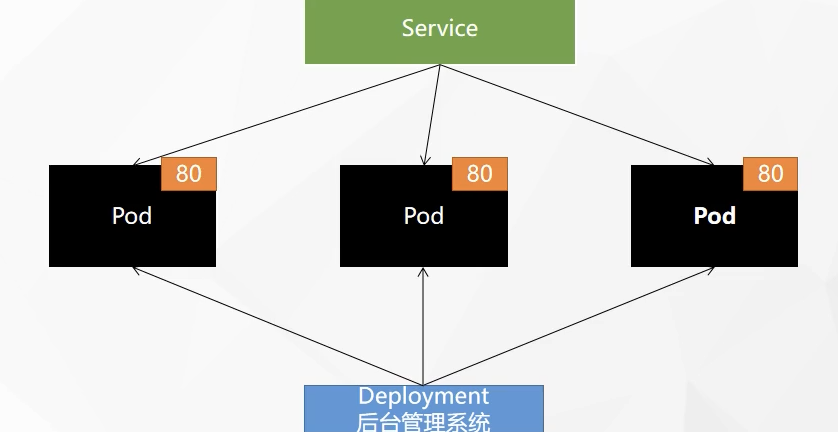

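# 2. Deployment

Using a Deployment is recommended.

Deployment: controls Pods and gives them capabilities such as multiple replicas, self-healing, and scaling.

A Deployment is a stateless resource type. When Pods are replaced by a rollback, scaling, or self-healing, the data they held is lost.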


Common Deployment commands

```bash
# create a Deployment
kubectl create deployment mytomcat --image=tomcat:8.5.68
# delete the Deployment; all of its Pods are deleted as well
kubectl delete deployment mytomcat
# create a Deployment with multiple replicas
kubectl create deployment my-dep --image=nginx --replicas=3   # 3 replicas
```

# 2.1 Self-healing

When you create a Deployment, it automatically creates a Pod named like xxname-xxxx (through a ReplicaSet). If you delete that Pod, the Deployment automatically creates a new one. This is the Deployment's self-healing capability.

Test

```bash
# Clear all Pods first, then compare what the following two commands do differently
kubectl run mynginx --image=nginx
kubectl create deployment mytomcat --image=tomcat:8.5.68   # creates a Deployment, which automatically creates a Pod named mytomcat-xxxx

# Result
kubectl delete pod mynginx -n default                     # the Pod is gone for good
kubectl delete pod mytomcat-6f5f895f4f-gdd4w -n default   # the Deployment automatically creates a new mytomcat-xxxx Pod
```

# 2.2 Multiple replicas

Create multiple replicas of the same service.

Command line

```bash
kubectl create deployment my-dep --image=nginx --replicas=3
watch -n 1 kubectl get pod   # monitor Pod status
```

YAML

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: my-dep
  name: my-dep
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-dep
  template:
    metadata:
      labels:
        app: my-dep
    spec:
      containers:
      - image: nginx
        name: nginx
```

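# 2.3 Scaling out / scaling in

scale: the size of a workload, i.e. its number of replicas

During traffic peaks you can add Pods to increase concurrency, and remove them again when traffic drops.

Scale out

```bash
# Option 1: scale the Deployment named my-dep to 5 replicas
kubectl scale deployment/my-dep --replicas=5
# Option 2: change replicas to 5 in the deployment/my-dep configuration
kubectl edit deployment/my-dep
```

Scale in

```bash
# Option 1: scale the Deployment named my-dep down to 2 replicas
kubectl scale deployment/my-dep --replicas=2
# Option 2: change replicas to 2 in the deployment/my-dep configuration
kubectl edit deployment/my-dep
```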

You can also scale from the dashboard with its Scale action.
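The commands above change the replica count by hand. For scaling that happens automatically during traffic peaks, Kubernetes provides the HorizontalPodAutoscaler. This sketch makes several assumptions:

- metrics-server is installed.
- The `my-dep` containers declare CPU requests. The Deployment in 2.2 sets none, so you would need to add them.
- The 2 to 5 replica bounds and the 80% target are arbitrary.

`kubectl autoscale deployment my-dep --min=2 --max=5 --cpu-percent=80` creates an equivalent object from the command line.

```yaml
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: my-dep                         # hypothetical: named after the Deployment it scales
spec:
  scaleTargetRef:                      # the workload whose replicas field the HPA adjusts
    apiVersion: apps/v1
    kind: Deployment
    name: my-dep
  minReplicas: 2
  maxReplicas: 5
  targetCPUUtilizationPercentage: 80   # average CPU usage relative to the containers' CPU requests
```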

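# 2.4 Failover

- When a Pod fails (its node goes down, the Pod is deleted, a container crashes, etc.):
  - Self-healing: k8s tries to restart or recreate the Pod. This is called self-healing.
  - Failover: when the Pod cannot be restarted, for example because its machine lost power, k8s starts a new Pod on another machine. This is called failover.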

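# 2.5 Rolling update

A rolling update replaces only part of the Pods at a time. A service may be backed by several Pods. When upgrading from v1 to v2, k8s first starts a v2 Pod. Each time a v2 Pod starts successfully, it stops a v1 Pod and shifts part of the traffic to the v2 Pods. This continues until all Pods are updated.

- Pros: updates without downtime

- Cons: during the update, v1 and v2 serve traffic side by side, so requests may see inconsistent versions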
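The Deployment's `strategy` field controls how many Pods are replaced at a time. This sketch reuses the `my-dep` Deployment with illustrative values `maxSurge: 1` and `maxUnavailable: 0` (the defaults are 25% each), so capacity never drops below three ready Pods. The readiness probe is an added assumption: it decides when a new v2 Pod counts as successfully started.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-dep
spec:
  replicas: 3
  strategy:
    type: RollingUpdate          # the default strategy for Deployments
    rollingUpdate:
      maxSurge: 1                # at most one Pod above the desired count during the update
      maxUnavailable: 0          # never fewer than 3 ready Pods
  selector:
    matchLabels:
      app: my-dep
  template:
    metadata:
      labels:
        app: my-dep
    spec:
      containers:
      - name: nginx
        image: nginx:1.16.1
        readinessProbe:          # a new Pod only receives traffic once this succeeds
          httpGet:
            path: /
            port: 80
```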

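Commands

```bash
# rolling update: set a new image for the nginx container
kubectl set image deployment/my-dep nginx=nginx:1.16.1 --record   # --record saves the command in the revision history (deprecated in newer kubectl releases)
# follow the progress of the rollout
kubectl rollout status deployment/my-dep
```

Or edit the YAML file:

```bash
kubectl edit deployment/my-dep
```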

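# 2.6 Rollback

You can roll back to a revision recorded by earlier rolling updates. The rollback itself also proceeds as a rolling update.

Because a Deployment is stateless, data held in the Pods before a rollback, scaling, or self-healing is lost.

Commands

```bash
# revision history
kubectl rollout history deployment/my-dep
# details of one revision
kubectl rollout history deployment/my-dep --revision=2
# roll back to the previous revision
kubectl rollout undo deployment/my-dep
# roll back to a specific revision
kubectl rollout undo deployment/my-dep --to-revision=2
```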
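Newer kubectl releases deprecate `--record`. The text in the CHANGE-CAUSE column of `kubectl rollout history` can come from the `kubernetes.io/change-cause` annotation instead. `revisionHistoryLimit` limits how many old revisions stay available for `rollout undo`. Here is a sketch; the annotation text is a hypothetical example.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-dep
  annotations:
    # copied to the new revision and shown as CHANGE-CAUSE by `kubectl rollout history`
    kubernetes.io/change-cause: "upgrade nginx to 1.16.1"   # hypothetical message
spec:
  revisionHistoryLimit: 10     # old ReplicaSets kept for rollback (10 is also the default)
  replicas: 3
  selector:
    matchLabels:
      app: my-dep
  template:
    metadata:
      labels:
        app: my-dep
    spec:
      containers:
      - name: nginx
        image: nginx:1.16.1
```

You can also set the same annotation from the command line with `kubectl annotate deployment/my-dep kubernetes.io/change-cause="upgrade nginx to 1.16.1"` right after changing the image.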

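# 2.7 Summary

- Besides Deployment, k8s has StatefulSet, DaemonSet, Job and other resource types. Together they are called workloads.
- Deploy stateful applications with a StatefulSet and stateless applications with a Deployment.
- https://kubernetes.io/zh/docs/concepts/workloads/controllers/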
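Job and CronJob have no example of their own in these notes. Here is a minimal CronJob sketch modeled on the one in the official docs. The name `hello` and the one-minute schedule are illustrative. `batch/v1` assumes Kubernetes 1.21 or newer; older clusters use `batch/v1beta1`.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: hello                      # hypothetical name
spec:
  schedule: "*/1 * * * *"          # standard cron syntax: every minute
  jobTemplate:                     # each run creates a Job from this template
    spec:
      template:
        spec:
          containers:
          - name: hello
            image: busybox:1.28
            command: ['sh', '-c', 'date; echo Hello from the Kubernetes cluster']
          restartPolicy: OnFailure # Job Pods must use OnFailure or Never
```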

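# 3. StatefulSet

- StatefulSet: stateful application deployment, e.g. Redis or databases. Provides stable storage and a stable network identity, and keeps data on persistent volumes across rollback or failover.

- A StatefulSet is essentially a variant of Deployment, and it became GA in v1.9. It is designed for stateful services: the Pods it manages have fixed names and a fixed start/stop order. In a StatefulSet the Pod name serves as the Pod's network identity (hostname), and persistent storage is required as well.

- A Deployment is paired with a regular Service, while a StatefulSet is paired with a headless Service. A headless Service has no Cluster IP. Resolving its name returns the Endpoint list of all Pods behind it.

- On top of the headless Service, the StatefulSet also creates a DNS name for every Pod replica it controls, in the format:

`$(podname).$(headless service name)`, with the FQDN `$(podname).$(headless service name).$(namespace).svc.cluster.local`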

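Characteristics

- Pod consistency: covers ordering (start and stop order) and network consistency. This consistency is tied to the Pod, not to the node the Pod is scheduled on.
- Stable ordering: in a StatefulSet with N replicas, each Pod gets a unique ordinal in the range [0, N).
- Stable network identity: a Pod's hostname follows the pattern `$(statefulset name)-$(ordinal)`.
- Stable storage: a PV is created for each Pod through volumeClaimTemplates. Deleting Pods or reducing the replica count does not delete the associated volumes.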

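Components:

- Headless Service: defines the Pods' network identity (DNS domain).

- volumeClaimTemplates: the volume claim template. You give it a PVC name and size, and it creates the PVCs automatically. Those PVCs must be provisioned by a StorageClass.

- StatefulSet: defines the application itself. In the example below it runs Nginx with three Pod replicas, and each Pod has its own domain name.

StatefulSet fields in detail

- `kubectl explain sts.spec`: explains the main fields
  - replicas: number of replicas
  - selector: which Pods this StatefulSet manages
  - serviceName: must reference a headless Service
  - template: defines the Pod template, including which volume it mounts
  - volumeClaimTemplates: generates the PVCs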

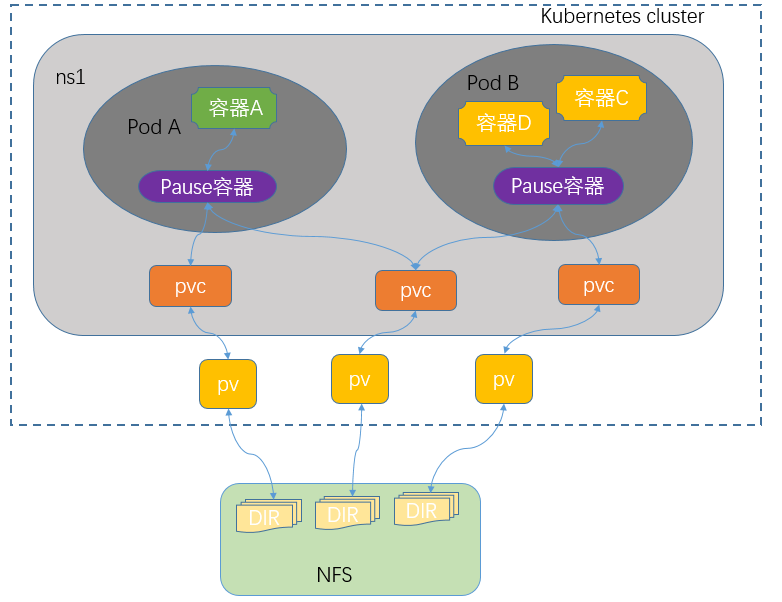

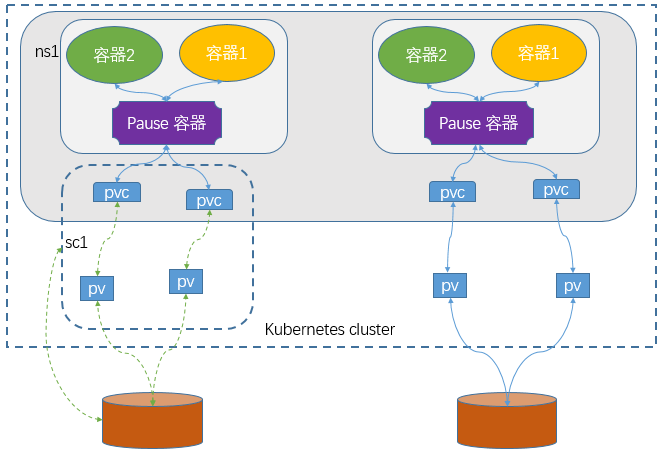

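# 3.0 PV & PVC and SC

PV & PVC

PV: Persistent Volume. Stores the data an application needs to persist at a designated location. Think of it as the venue.

PVC: Persistent Volume Claim. Declares the specification of the persistent volume to be used. Think of it as the application form for the venue.

PV & PVC together: when a Pod changes data in the mounted volume, the data in the PV pool is updated too.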
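Here is a sketch of static provisioning. An administrator creates the PV (the venue) by hand, a PVC (the application form) claims it, and a Pod then mounts the claim. The following are all hypothetical:

- the NFS server address `172.31.0.4`
- the export path
- the class name `nfs`
- the object names

Every node also needs an NFS client installed for the mount to work.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-1g                  # hypothetical name
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteMany
  storageClassName: nfs        # hypothetical class name; only PVCs requesting the same class bind here
  nfs:
    server: 172.31.0.4         # hypothetical NFS server
    path: /nfs/data/01         # hypothetical exported directory
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-pvc               # hypothetical name
spec:
  accessModes:
  - ReadWriteMany
  storageClassName: nfs
  resources:
    requests:
      storage: 200Mi           # binds to a PV offering at least this much
---
apiVersion: v1
kind: Pod
metadata:
  name: pvc-demo               # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx
    volumeMounts:
    - name: html
      mountPath: /usr/share/nginx/html
  volumes:
  - name: html
    persistentVolumeClaim:
      claimName: demo-pvc      # the Pod refers to the claim, never to the PV directly
```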

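SC

SC is short for StorageClass. This resource provides an interface for provisioning PVs automatically. Users do not create PVs by hand. When a PVC is created, the provisioner named in the StorageClass creates a matching PV, and the persistentvolume controller binds it to the PVC.

To use an SC, the backing storage must offer a management interface (for example a RESTful API) that a provisioner can drive. The PVC must also reference the SC by its storage class name. In short, an SC is the interface that lets the backing storage create PVs automatically and bind them to the corresponding PVCs.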
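With a StorageClass in place, you only need to write the PVC, because the provisioner creates the PV. This sketch assumes that the `nginx-nfs-storage` StorageClass from section 3.2 and its NFS provisioner are already running. The PVC name `dynamic-pvc` is hypothetical.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: dynamic-pvc                     # hypothetical name
  namespace: nginx-ss
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: nginx-nfs-storage   # the SC whose provisioner creates the PV
  resources:
    requests:
      storage: 1Gi
```

After you apply it, `kubectl get pvc,pv -n nginx-ss` should show the claim as `Bound` to an automatically created PV.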

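# 3.1 Questions to consider

Why is a headless Service needed?

- A headless Service is a special kind of Service. When you need neither load balancing nor a Service IP, you can create one by setting the Cluster IP (`spec.clusterIP`) to `"None"`.
- With a Deployment, Pod names contain random strings, so Pods have no order. A StatefulSet requires order: a Pod cannot be replaced arbitrarily, and a recreated Pod keeps the same name. Pod IPs change, so Pods are identified by name instead, which means the Pod name must be a persistent, stable identifier. This is what the headless Service provides: a unique, resolvable name for each Pod.

Why is volumeClaimTemplate needed?

Stateful replica sets all use persistent storage. In a distributed system the data on each node differs, so nodes cannot share one volume; each node needs its own dedicated storage. A volume defined in a Deployment's Pod template is shared by all replicas, and they all see the same data, because every replica is built from the same template. In a StatefulSet, each Pod needs its own dedicated volume, so the volume cannot come from the Pod template. The StatefulSet therefore uses volumeClaimTemplates, the volume claim template. It generates a separate PVC for each Pod and binds a PV to it, which gives each Pod dedicated storage.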

# 3.2 Deploying a StatefulSet service

# a. Create the namespace

```bash
cat << EOF > nginx-ns.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: nginx-ss
EOF

kubectl apply -f nginx-ns.yaml
```

# b. Create dynamic storage based on an SC

```bash
cat << EOF > nginx-sc.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nginx-nfs-storage
provisioner: fuseim.pri/ifs  # or choose another name, must match deployment's env PROVISIONER_NAME
parameters:
  archiveOnDelete: "false"   # When set to "false" your PVs will not be archived
                             # by the provisioner upon deletion of the PVC.
EOF

kubectl apply -f nginx-sc.yaml
```

# c. Create the StatefulSet

```bash
cat << EOF > nginx-ss.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
  namespace: nginx-ss
spec:
  selector:
    matchLabels:
      app: nginx           # must match .spec.template.metadata.labels
  serviceName: "nginx"     # the headless Service this StatefulSet belongs to
  replicas: 3              # number of replicas
  template:
    metadata:
      labels:
        app: nginx         # must match .spec.selector.matchLabels
    spec:
      terminationGracePeriodSeconds: 10
      containers:
      - name: nginx
        image: www.my.com/web/nginx:v1
        ports:
        - containerPort: 80
          name: web
        volumeMounts:
        - name: nginx-pvc
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:    # can be seen as a template for PVCs
  - metadata:
      name: nginx-pvc
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: "nginx-nfs-storage"   # storage class name; change it to one that exists in your cluster
      resources:
        requests:
          storage: 1Gi
EOF

kubectl apply -f nginx-ss.yaml
```

# d. Observe the ordered creation of the Pods

```bash
kubectl get pod -n nginx-ss   # web-0, web-1 and web-2 are created one after another
```

# e. Create the Service (svc)

```bash
cat << EOF > nginx-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
  namespace: nginx-ss
  labels:
    app: nginx
spec:
  ports:
  - port: 80
    name: web
  clusterIP: None
  selector:
    app: nginx
EOF

kubectl apply -f nginx-svc.yaml
```

- Each Pod has a stable hostname based on its ordinal index.

- When a Pod is deleted and recreated, its IP may change, but access by name keeps working.
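You can check the stable names from inside the cluster. The official StatefulSet tutorial does this interactively with `kubectl run` and `busybox:1.28`. Below is the same lookup as a one-shot Pod. It assumes the default cluster domain `cluster.local` and uses a hypothetical Pod name `dns-test`.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: dns-test                 # hypothetical name
  namespace: nginx-ss
spec:
  restartPolicy: Never           # run the lookup once and stop
  containers:
  - name: dns-test
    image: busybox:1.28          # the tag used in the upstream StatefulSet tutorial
    # $(podname).$(headless service name).$(namespace).svc.cluster.local
    command: ['nslookup', 'web-0.nginx.nginx-ss.svc.cluster.local']
```

`kubectl logs dns-test -n nginx-ss` then shows the address currently behind `web-0`. If you delete `web-0` and rerun the test Pod, the name still resolves, possibly to a new IP.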

# 3.3 Scaling a StatefulSet

Scaling a StatefulSet means increasing or decreasing its number of replicas by updating the `replicas` field. You can use `kubectl scale` or `kubectl patch` to do this.

```bash
kubectl scale sts web --replicas=4 -n nginx-ss   # scale out
kubectl scale sts web --replicas=2 -n nginx-ss   # scale in
```

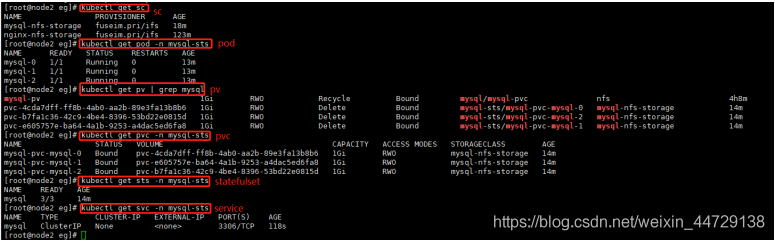

# 3.4 Deploying MySQL

Create the MySQL namespace and SC

```bash
cat << EOF > mysql-ns.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: mysql-sts
EOF

cat << EOF > mysql-sc.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: mysql-nfs-storage
provisioner: fuseim.pri/ifs  # or choose another name, must match deployment's env PROVISIONER_NAME
parameters:
  archiveOnDelete: "false"   # When set to "false" your PVs will not be archived
                             # by the provisioner upon deletion of the PVC.
EOF

kubectl apply -f mysql-ns.yaml
kubectl apply -f mysql-sc.yaml
```

Deploy the MySQL StatefulSet

```bash
cat << EOF > mysql-ss.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql
  namespace: mysql-sts
spec:
  selector:
    matchLabels:
      app: mysql           # must match .spec.template.metadata.labels
  serviceName: "mysql"     # the headless Service this StatefulSet belongs to
  replicas: 3              # number of replicas
  template:
    metadata:
      labels:
        app: mysql         # must match .spec.selector.matchLabels
    spec:
      terminationGracePeriodSeconds: 10
      containers:
      - name: mysql
        image: www.my.com/sys/mysql:5.7
        ports:
        - containerPort: 3306
          name: mysql
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: "123456"
        volumeMounts:
        - name: mysql-pvc
          mountPath: /var/lib/mysql
  volumeClaimTemplates:    # can be seen as a template for PVCs
  - metadata:
      name: mysql-pvc
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: "mysql-nfs-storage"   # storage class name; change it to one that exists in your cluster
      resources:
        requests:
          storage: 1Gi
EOF

kubectl apply -f mysql-ss.yaml
```

Create the Service

cat << EOF > mysql-svc.yaml apiVersion: v1 kind: Service metadata: name: mysql namespace: mysql-sts labels: app: mysql spec: ports: - port: 3306 name: mysql clusterIP: None selector: app: mysql EOF # kubectl apply -f mysql-svc.yaml1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

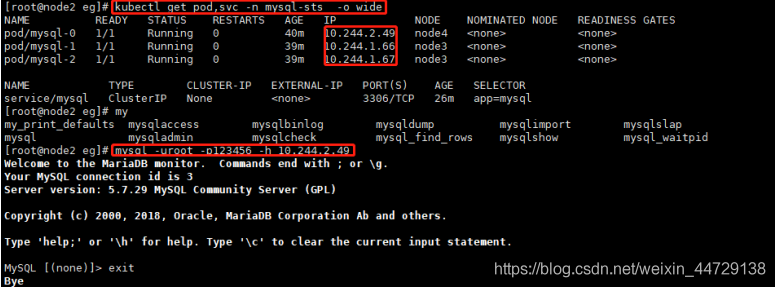

19查看 并 访问测试

- Check

- Test

Write the mysql-read Service

```bash
cat << EOF > mysql-svc-port.yaml
apiVersion: v1
kind: Service
metadata:
  name: mysql-read
  namespace: mysql-sts
  labels:
    app: mysql-2
spec:
  ports:
  - port: 3306
    name: mysql
  selector:
    # all StatefulSet Pods carry app: mysql, so "app: mysql-2" would match nothing;
    # the StatefulSet controller labels every Pod with its own name under this key
    statefulset.kubernetes.io/pod-name: mysql-2
EOF

kubectl apply -f mysql-svc-port.yaml
```

- To make mysql-read reachable from outside the cluster, expose it as a NodePort. Run `kubectl edit svc/mysql-read -n mysql-sts`, change `type` to `NodePort` in the editor, and add `nodePort: 31988` under `spec.ports`. Then run `kubectl get svc -n mysql-sts` again.
- Log in from your local computer with a MySQL client tool.

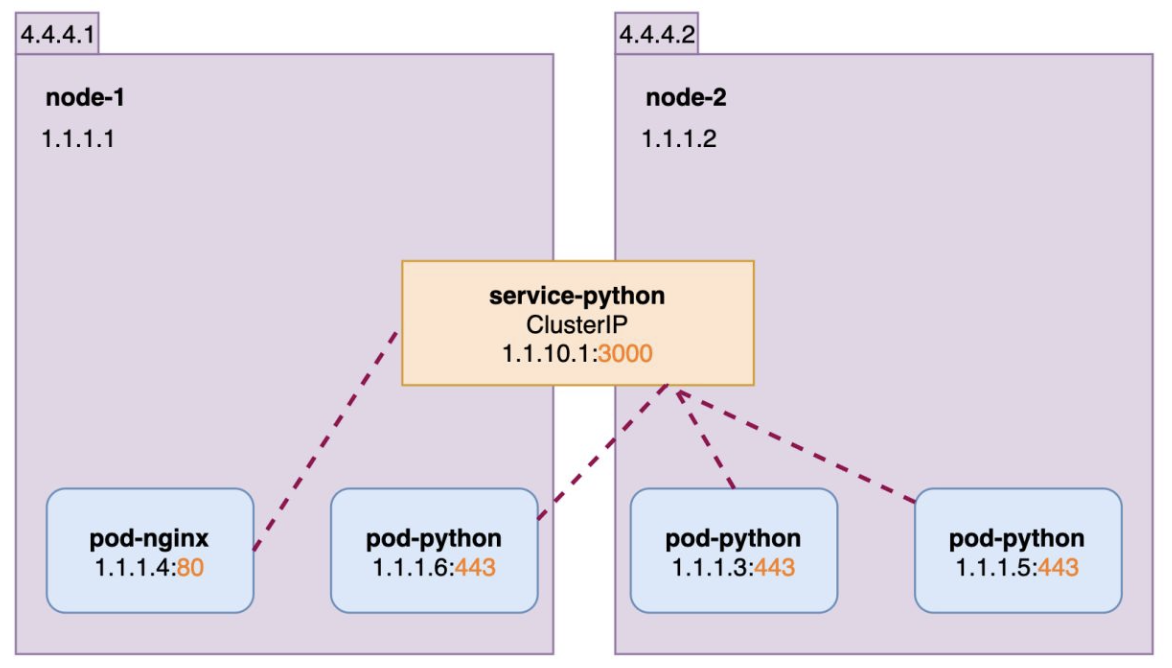
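For reference, this is roughly what `mysql-read` looks like after the edit described above, written as a manifest you can apply directly instead of using `kubectl edit`. It is a sketch. Port 31988 comes from these notes. The selector assumes that the `statefulset.kubernetes.io/pod-name` label, which the StatefulSet controller adds to every Pod it creates, is what pins the Service to `mysql-2`.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: mysql-read
  namespace: mysql-sts
spec:
  type: NodePort
  ports:
  - port: 3306                 # Service port inside the cluster
    name: mysql
    nodePort: 31988            # must lie in the node-port range (30000-32767 by default)
  selector:
    statefulset.kubernetes.io/pod-name: mysql-2   # label set by the StatefulSet controller
```

A client outside the cluster would then connect with something like `mysql -h <node-ip> -P 31988 -uroot -p`, where `<node-ip>` is the address of any node.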

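# 4. Service

- Service: an abstract way to expose a set of Pods as a network service.

- A Service exposes a port in the cluster for front-end applications to access.

- One Service points to multiple Pods, which gives a load-balancing effect.

- Newly created Pods whose labels match the Service's selector join the Service automatically.

- Deleting a Service does not delete the Pods of the corresponding Deployment.

Expose a Deployment from the command line

```bash
# expose the Deployment
kubectl expose deployment my-dep --port=8000 --target-port=80
# list Services
kubectl get service
# find Pods by label
kubectl get pod -l app=my-dep
```

Create the Service with YAML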

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: my-dep
  name: my-dep
spec:
  selector:
    app: my-dep
  ports:
  - port: 8000
    protocol: TCP
    targetPort: 80
```

# 4.1 ClusterIP

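The default Service type is ClusterIP.

A ClusterIP Service can only be used inside the cluster.

Accessing a ClusterIP Service:

- Inside the cluster:
  - via `serviceIP:port`
  - or via `serviceName.serviceNamespace.svc:port` (this form only works from inside other Pods)
- It cannot be accessed from outside the cluster.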
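You can try the DNS form from a throwaway Pod. This sketch assumes the `my-dep` Service from above lives in the `default` namespace, and it uses a hypothetical Pod name `svc-client`. Note that it targets the Service port 8000, not the container port 80.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: svc-client               # hypothetical name
  namespace: default
spec:
  restartPolicy: Never           # fetch once and stop
  containers:
  - name: client
    image: busybox:1.28
    # request the Service by DNS name on its Service port and print the body to stdout
    command: ['wget', '-q', '-O', '-', 'http://my-dep.default.svc:8000']
```

If the Service has ready endpoints, `kubectl logs svc-client` should print the nginx welcome page.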

Create a Service of type ClusterIP from the command line

```bash
kubectl expose deployment my-dep --port=8000 --target-port=80
# or
kubectl expose deployment my-dep --port=8000 --target-port=80 --type=ClusterIP
```

Create a Service of type ClusterIP with YAML

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: my-dep
  name: my-dep
spec:
  selector:
    app: my-dep
  ports:
  - port: 8000
    protocol: TCP
    targetPort: 80
  type: ClusterIP
```

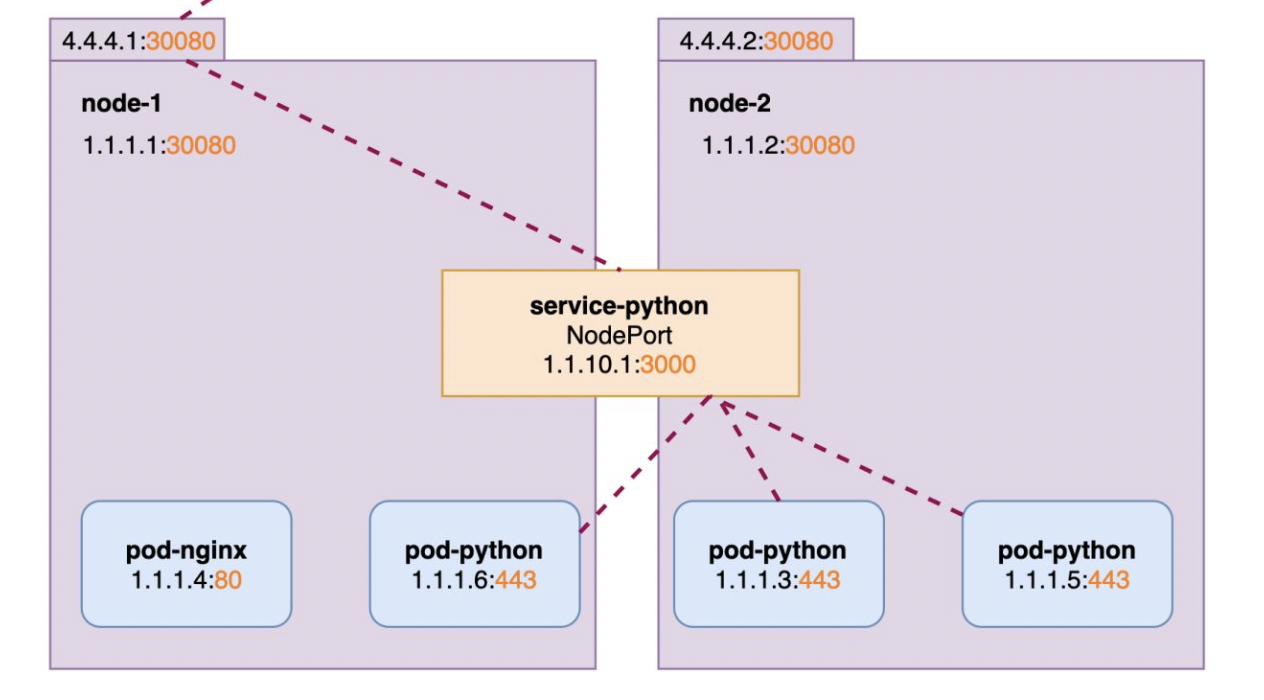

# 4.2 NodePort

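A NodePort Service can be accessed publicly, i.e. from outside the cluster.

By default, NodePorts are allocated from the range 30000-32767. The same external port is opened on every machine in the cluster.

Accessing a NodePort Service:

- Inside the cluster:
  - via `serviceIP:port`
  - or via `serviceName.serviceNamespace.svc:port` (this form only works from inside other Pods)
- Outside the cluster: use the public IP of any machine in the cluster plus the NodePort.

Create a NodePort Service from the command line

```bash
kubectl expose deployment my-dep --port=8000 --target-port=80 --type=NodePort
```

Create a NodePort Service with YAML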

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: my-dep
  name: my-dep
spec:
  ports:
  - port: 8000
    protocol: TCP
    targetPort: 80
  selector:
    app: my-dep
  type: NodePort
```

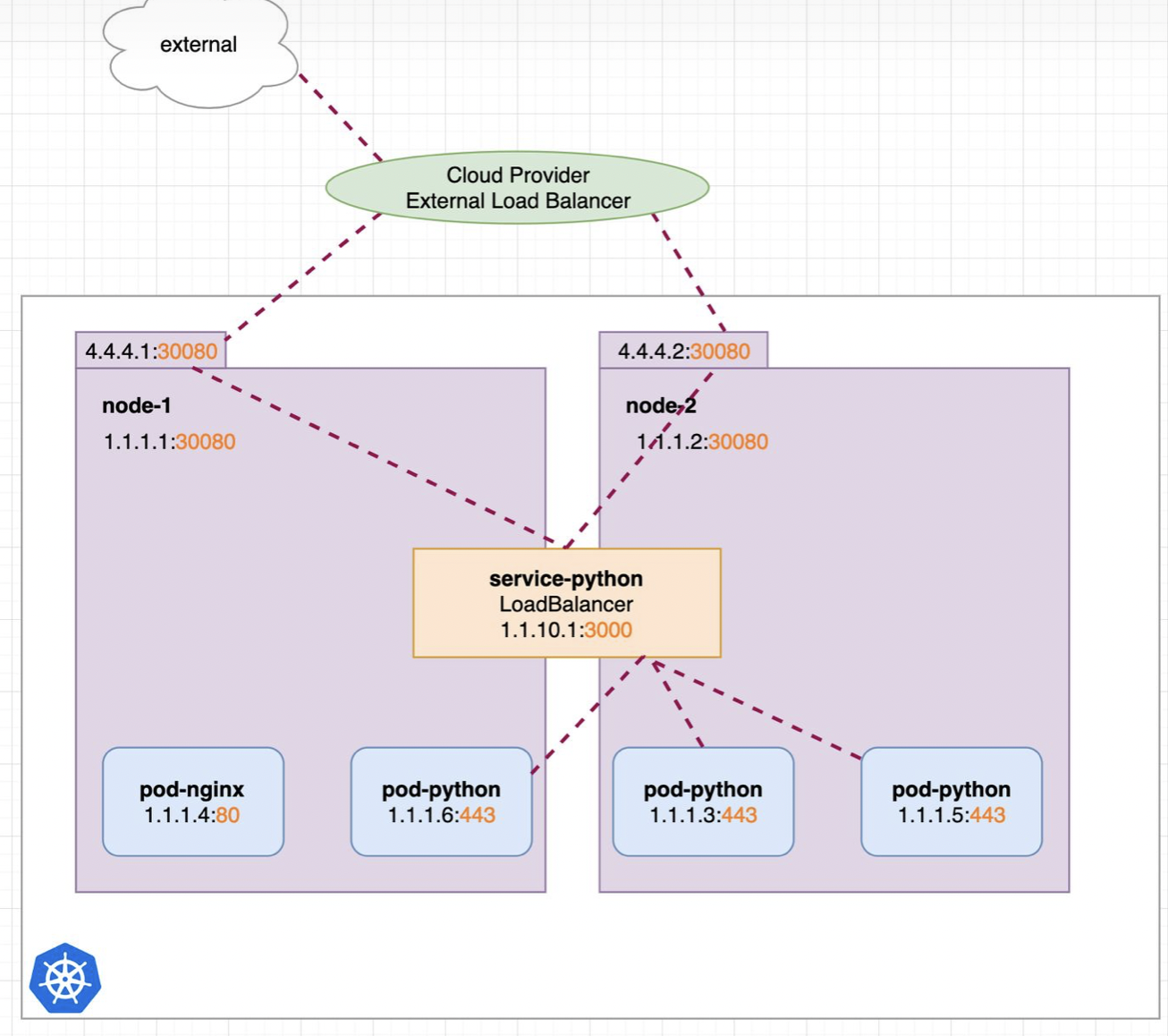

# 4.3 LoadBalancer

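A LoadBalancer Service is another way to make a service reachable from outside the cluster. Not every k8s cluster supports it; support is found mostly in managed clusters on public clouds. The load balancer is created asynchronously. Information about the provisioned load balancer is published in the Service's `status.loadBalancer` field.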
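A LoadBalancer Service is declared like the NodePort one, with a different `type`. This sketch uses a hypothetical name `my-dep-lb` and assumes a cloud provider (or an add-on) that can provision load balancers. On clusters without one, the EXTERNAL-IP column of `kubectl get svc my-dep-lb` stays `<pending>`.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-dep-lb              # hypothetical name
spec:
  type: LoadBalancer           # also allocates a ClusterIP and a NodePort by default
  selector:
    app: my-dep
  ports:
  - port: 8000                 # port exposed by the external load balancer
    protocol: TCP
    targetPort: 80             # container port of the nginx Pods
```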

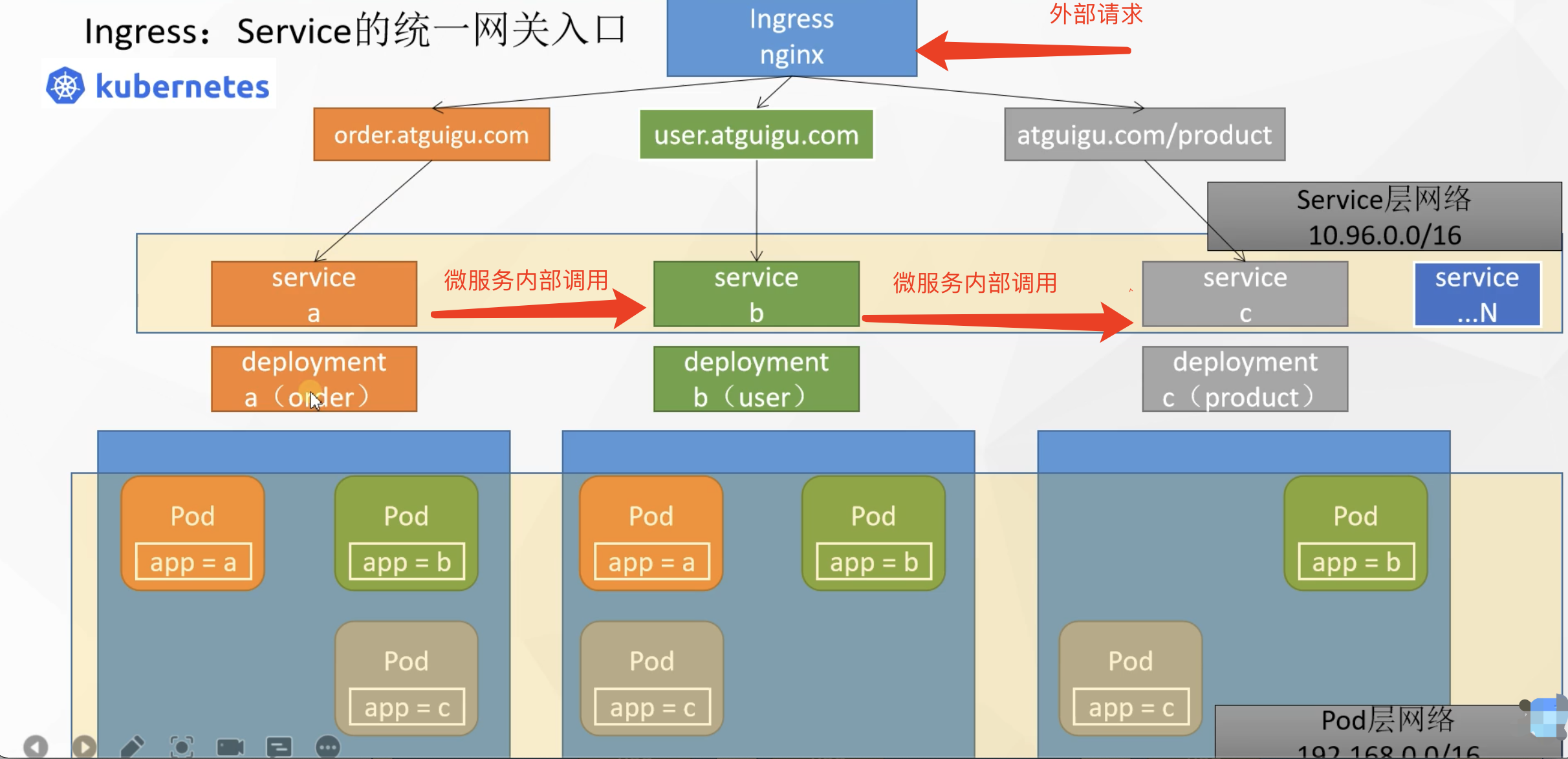

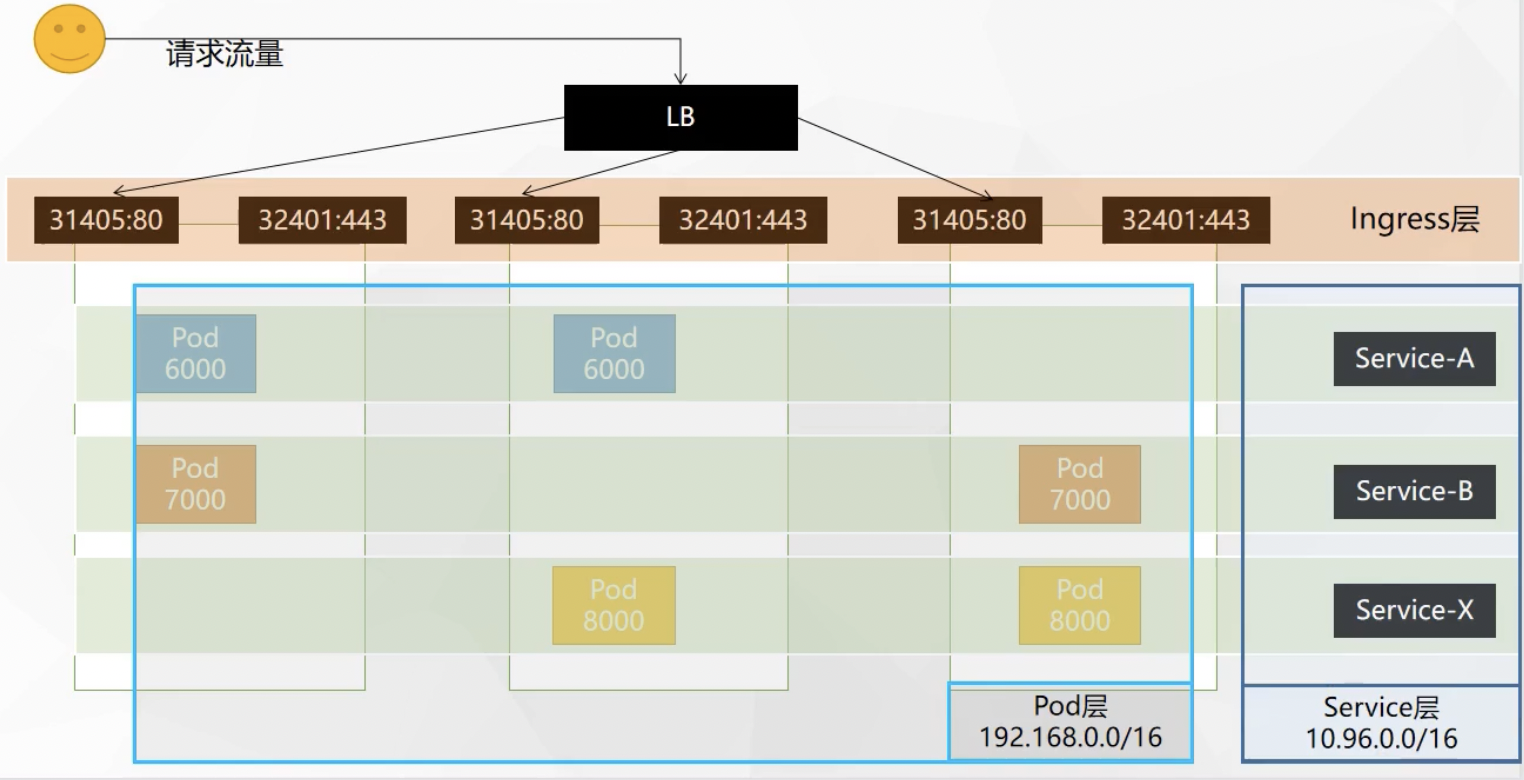

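# 5. Ingress

Ingress: the unified gateway entry point in front of Services

- Under the hood, ingress-nginx is a reverse proxy built on nginx

- Official site: https://kubernetes.github.io/ingress-nginx/

- k8s network model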

# 5.1 Installing Ingress

```bash
wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.47.0/deploy/static/provider/baremetal/deploy.yaml

# change the image
vi deploy.yaml
# set the value of image to:
#   registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/ingress-nginx-controller:v0.46.0

# install
kubectl apply -f deploy.yaml

# check the installation
kubectl get pod,svc -n ingress-nginx

# finally, remember to open the ports exposed by the svc in your firewall
```

The modified deploy.yaml

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx

---
# Source: ingress-nginx/templates/controller-serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
automountServiceAccountToken: true
---
# Source: ingress-nginx/templates/controller-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
data:
---
# Source: ingress-nginx/templates/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
      - networking.k8s.io   # k8s 1.14+
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - extensions
      - networking.k8s.io   # k8s 1.14+
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io   # k8s 1.14+
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
---
# Source: ingress-nginx/templates/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/controller-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - configmaps
      - pods
      - secrets
      - endpoints
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
      - networking.k8s.io   # k8s 1.14+
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
      - networking.k8s.io   # k8s 1.14+
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io   # k8s 1.14+
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - configmaps
    resourceNames:
      - ingress-controller-leader-nginx
    verbs:
      - get
      - update
  - apiGroups:
      - ''
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
---
# Source: ingress-nginx/templates/controller-rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/controller-service-webhook.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller-admission
  namespace: ingress-nginx
spec:
  type: ClusterIP
  ports:
    - name: https-webhook
      port: 443
      targetPort: webhook
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
---
# Source: ingress-nginx/templates/controller-service.yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: http
    - name: https
      port: 443
      protocol: TCP
      targetPort: https
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
---
# Source: ingress-nginx/templates/controller-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/component: controller
  revisionHistoryLimit: 10
  minReadySeconds: 0
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/component: controller
    spec:
      dnsPolicy: ClusterFirst
      containers:
        - name: controller
          image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/ingress-nginx-controller:v0.46.0
          imagePullPolicy: IfNotPresent
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
          args:
            - /nginx-ingress-controller
            - --election-id=ingress-controller-leader
            - --ingress-class=nginx
            - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
            - --validating-webhook=:8443
            - --validating-webhook-certificate=/usr/local/certificates/cert
            - --validating-webhook-key=/usr/local/certificates/key
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            runAsUser: 101
            allowPrivilegeEscalation: true
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: LD_PRELOAD
              value: /usr/local/lib/libmimalloc.so
          livenessProbe:
            failureThreshold: 5
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
            - name: webhook
              containerPort: 8443
              protocol: TCP
          volumeMounts:
            - name: webhook-cert
              mountPath: /usr/local/certificates/
              readOnly: true
          resources:
            requests:
              cpu: 100m
              memory: 90Mi
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
        - name: webhook-cert
          secret:
            secretName: ingress-nginx-admission
---
# Source: ingress-nginx/templates/admission-webhooks/validating-webhook.yaml
# before changing this value, check the required kubernetes version
# https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#prerequisites
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  name: ingress-nginx-admission
webhooks:
  - name: validate.nginx.ingress.kubernetes.io
    matchPolicy: Equivalent
    rules:
      - apiGroups:
          - networking.k8s.io
        apiVersions:
          - v1beta1
        operations:
          - CREATE
          - UPDATE
        resources:
          - ingresses
    failurePolicy: Fail
    sideEffects: None
    admissionReviewVersions:
      - v1
      - v1beta1
    clientConfig:
      service:
        namespace: ingress-nginx
        name: ingress-nginx-controller-admission
        path: /networking/v1beta1/ingresses
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
rules:
  - apiGroups:
      - admissionregistration.k8s.io
    resources:
      - validatingwebhookconfigurations
    verbs:
      - get
      - update
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - secrets
    verbs:
      - get
      - create
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-createSecret.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-create
  annotations:
    helm.sh/hook: pre-install,pre-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
spec:
  template:
    metadata:
      name: ingress-nginx-admission-create
      labels:
        helm.sh/chart: ingress-nginx-3.33.0
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 0.47.0
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: create
          image: docker.io/jettech/kube-webhook-certgen:v1.5.1
          imagePullPolicy: IfNotPresent
          args:
            - create
            - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc
            - --namespace=$(POD_NAMESPACE)
            - --secret-name=ingress-nginx-admission
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-patchWebhook.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-patch
  annotations:
    helm.sh/hook: post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
spec:
  template:
    metadata:
      name: ingress-nginx-admission-patch
      labels:
        helm.sh/chart: ingress-nginx-3.33.0
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 0.47.0
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: patch
          image: docker.io/jettech/kube-webhook-certgen:v1.5.1
          imagePullPolicy: IfNotPresent
          args:
            - patch
            - --webhook-name=ingress-nginx-admission
            - --namespace=$(POD_NAMESPACE)
            - --patch-mutating=false
            - --secret-name=ingress-nginx-admission
            - --patch-failure-policy=Fail
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000
```

# 5.2 Usage

Official site: https://kubernetes.github.io/ingress-nginx/

Demo: the test environment used by sections a, b and c below (two Deployments and their Services).

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-server
spec:
  replicas: 2
  selector:
    matchLabels:
      app: hello-server
  template:
    metadata:
      labels:
        app: hello-server
    spec:
      containers:
      - name: hello-server
        image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/hello-server
        ports:
        - containerPort: 9000
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-demo
  name: nginx-demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-demo
  template:
    metadata:
      labels:
        app: nginx-demo
    spec:
      containers:
      - image: nginx
        name: nginx
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx-demo
  name: nginx-demo
spec:
  selector:
    app: nginx-demo
  ports:
  - port: 8000
    protocol: TCP
    targetPort: 80
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: hello-server
  name: hello-server
spec:
  selector:
    app: hello-server
  ports:
  - port: 8000
    protocol: TCP
    targetPort: 9000
```
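Each Service finds its Pods purely by label. `selector: app: nginx-demo` picks every Pod whose labels include `app: nginx-demo`. Traffic sent to the Service's `port` (8000) is delivered to the Pod's `targetPort` (80 or 9000).

Below is a toy Python model of that lookup. It assumes only equality-based selectors, and the Pod names are hypothetical. It shows which Pods are selected, not how kube-proxy actually forwards traffic.

```python
from typing import Dict, List

# Pod labels as set by the two Deployment templates above.
# Pod names are hypothetical; real Pods get random name suffixes.
PODS: Dict[str, Dict[str, str]] = {
    "hello-server-a": {"app": "hello-server"},
    "hello-server-b": {"app": "hello-server"},
    "nginx-demo-a": {"app": "nginx-demo"},
    "nginx-demo-b": {"app": "nginx-demo"},
}

# Service name -> (selector, port, targetPort), copied from the manifests above.
SERVICES = {
    "hello-server": ({"app": "hello-server"}, 8000, 9000),
    "nginx-demo": ({"app": "nginx-demo"}, 8000, 80),
}


def selector_matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """Equality-based selector: every key/value pair must be present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def endpoints(service: str) -> List[str]:
    """Return the 'pod:targetPort' pairs that traffic to service:port is sent to."""
    selector, _port, target_port = SERVICES[service]
    return sorted(
        f"{pod}:{target_port}"
        for pod, labels in PODS.items()
        if selector_matches(selector, labels)
    )


if __name__ == "__main__":
    for name, (_selector, port, _target) in SERVICES.items():
        print(f"{name}:{port} ->", endpoints(name))
```

Extra labels on a Pod do not prevent a match. Only the keys listed in the selector are compared.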


# a. Domain-name (host-based) access

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-host-bar
spec:
  ingressClassName: nginx
  rules:
  - host: "hello.atguigu.com"
    http:
      paths:
      - pathType: Prefix  # prefix match
        path: "/"
        backend:
          service:
            name: hello-server
            port:
              number: 8000
  - host: "demo.atguigu.com"
    http:
      paths:
      - pathType: Prefix
        path: "/nginx"  # requests are forwarded to the service below, which must be able to serve this path; otherwise it returns 404
        backend:
          service:
            name: nginx-demo  # e.g. for a Java service, use path rewriting to strip the /nginx prefix
            port:
              number: 8000
```
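The backend that serves a request depends on two things: the `Host` header and the path. Below is a simplified Python model of the matching rules as the Kubernetes Ingress documentation describes them:

- `Prefix` compares whole `/`-separated path elements.
- `Exact` compares the full string.
- When several paths match, the longest one wins.

It is an illustration, not the nginx configuration that ingress-nginx generates. It also shows that, without a rewrite, the original path is forwarded unchanged.

```python
from typing import List, Optional, Tuple

# (host, pathType, path, backend) copied from the Ingress above.
RULES: List[Tuple[str, str, str, str]] = [
    ("hello.atguigu.com", "Prefix", "/", "hello-server:8000"),
    ("demo.atguigu.com", "Prefix", "/nginx", "nginx-demo:8000"),
]


def _elements(path: str) -> List[str]:
    # Split on "/" and drop empty pieces, so "/nginx" and "/nginx/" compare equal.
    return [part for part in path.split("/") if part]


def path_matches(path_type: str, rule_path: str, request_path: str) -> bool:
    if path_type == "Exact":
        # Exact: the whole path must be identical (case-sensitive).
        return request_path == rule_path
    if path_type == "Prefix":
        # Prefix: the rule's elements must be a leading run of the request's elements,
        # so "/nginx" matches "/nginx/index.html" but not "/nginxfoo".
        rule = _elements(rule_path)
        return _elements(request_path)[: len(rule)] == rule
    raise ValueError(f"unsupported pathType: {path_type}")


def route(host: str, request_path: str) -> Optional[Tuple[str, str]]:
    """Return (backend, path sent upstream), or None if no rule matches."""
    host = host.split(":", 1)[0]  # ignore a ":port" suffix in the Host header
    candidates = [
        (len(rule_path), backend)
        for rule_host, path_type, rule_path, backend in RULES
        if rule_host == host and path_matches(path_type, rule_path, request_path)
    ]
    if not candidates:
        return None  # ingress-nginx answers these from its default backend (404)
    _, backend = max(candidates)  # longest matching rule path wins
    # Without a rewrite annotation the original path is forwarded unchanged.
    return backend, request_path


if __name__ == "__main__":
    print(route("hello.atguigu.com", "/"))
    print(route("demo.atguigu.com", "/nginx"))  # nginx-demo receives "/nginx"
```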


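- Map the domain names to a node IP in the local hosts file
- Access:
  - http://xxx:30948/
  - http://xxx:30948/nginx (the `/nginx` path is passed to the backend as-is, so nginx-demo receives `/nginx` and returns 404 unless it serves that path)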
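Host-based rules are selected by the `Host` header. So instead of editing the hosts file, the same rules can be exercised by sending requests straight to the node IP with an explicit `Host` header. Below is a minimal Python sketch using only the standard library:

- `NODE_IP` is a hypothetical placeholder standing in for `xxx`.
- `NODE_PORT` is the 30948 from the URLs above.
- `fetch` only works against a reachable cluster.

```python
import urllib.error
import urllib.request

# Hypothetical placeholders: substitute a real node IP for "xxx" and the
# NodePort of the ingress-nginx controller Service (30948 in the notes above).
NODE_IP = "192.168.0.10"
NODE_PORT = 30948


def build_request(host: str, path: str = "/") -> urllib.request.Request:
    """Address the node directly, but set Host so the matching Ingress rule is chosen."""
    url = f"http://{NODE_IP}:{NODE_PORT}{path}"
    return urllib.request.Request(url, headers={"Host": host})


def fetch(host: str, path: str = "/") -> int:
    """Send the request and return the HTTP status code (requires a reachable cluster)."""
    try:
        with urllib.request.urlopen(build_request(host, path), timeout=5) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx answers, such as a 404 for an unserved path, raise HTTPError.
        return err.code
```

Example: `fetch("demo.atguigu.com", "/nginx")` exercises the second rule. With the Ingress from section a, it should come back as 404, since nginx-demo receives the unmodified `/nginx` path.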

# b. Path rewriting

Rewrite the request path at the Ingress so that the Service receives a path it can actually serve.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2  # path rewrite
  name: ingress-host-bar
spec:
  ingressClassName: nginx
  rules:
  - host: "hello.atguigu.com"
    http:
      paths:
      - pathType: Prefix
        path: "/"
        backend:
          service:
            name: hello-server
            port:
              number: 8000
  - host: "demo.atguigu.com"
    http:
      paths:
      - pathType: Prefix
        path: "/nginx(/|$)(.*)"  # the service below must be able to serve the resulting path (otherwise 404); the Ingress strips /nginx and forwards only what (.*) captured
        backend:
          service:
            name: nginx-demo  # e.g. for a Java service, use path rewriting to strip the /nginx prefix
            port:
              number: 8000
```
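With `rewrite-target: /$2`, ingress-nginx treats the path as a case-insensitive regular expression. It replaces `$2` with whatever the second capture group `(.*)` matched. Below is a small Python simulation of that substitution, built from the regex and target in the manifest above. It is a model of the behaviour, not ingress-nginx code.

```python
import re
from typing import Optional

# Path regex and rewrite target copied from the Ingress above.
PATH_REGEX = re.compile(r"^/nginx(/|$)(.*)", re.IGNORECASE)
REWRITE_TARGET = "/$2"


def rewrite(request_path: str) -> Optional[str]:
    """Return the path nginx-demo would receive, or None if the rule does not match."""
    m = PATH_REGEX.match(request_path)
    if m is None:
        return None
    # Replace each $N in the target with capture group N (empty if it captured nothing).
    return re.sub(r"\$(\d)", lambda g: m.group(int(g.group(1))) or "", REWRITE_TARGET)


if __name__ == "__main__":
    for path in ["/nginx", "/nginx/", "/nginx/index.html", "/nginxfoo"]:
        print(path, "->", rewrite(path))
```

The `(/|$)` group is what lets `/nginx` and `/nginx/` both map to `/` while rejecting `/nginxfoo`. Annotations apply to the whole Ingress object, so this rewrite also affects the `hello.atguigu.com` rule. Putting the rewritten path in its own Ingress is one way to keep the two apart.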


# c. Rate limiting

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-limit-rate
  annotations:
    nginx.ingress.kubernetes.io/limit-rps: "1"  # rate limit: 1 request per second per client IP
spec:
  ingressClassName: nginx
  rules:
  - host: "haha.atguigu.com"
    http:
      paths:
      - pathType: Exact  # exact match
        path: "/"
        backend:
          service:
            name: nginx-demo
            port:
              number: 8000
```
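`limit-rps` caps how many requests per second a single client IP may send. Per the ingress-nginx annotation docs, it is enforced with nginx's `limit_req` and allows a burst of `limit-rps` × `limit-burst-multiplier` (default 5). Excess requests are rejected, with a 503 status by default.

The Python below is a deliberately simplified token-bucket model of "1 request per second", meant for building intuition. It is not nginx's leaky-bucket implementation, and `capacity` only roughly corresponds to the burst setting.

```python
from typing import List


class TokenBucket:
    """Simplified per-client limiter: refills `rate` tokens/s, stores at most `capacity`."""

    def __init__(self, rate: float, capacity: float) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.last = 0.0

    def allow(self, now: float) -> bool:
        # Refill for the time elapsed since the previous request, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def simulate(times: List[float], rate: float = 1.0, capacity: float = 1.0) -> List[bool]:
    """Replay request timestamps (seconds) from one client IP; True = allowed."""
    bucket = TokenBucket(rate, capacity)
    return [bucket.allow(t) for t in times]


if __name__ == "__main__":
    print(simulate([0.0, 0.25, 0.5, 1.0, 1.25, 2.0]))
```

With `rate=1` and `capacity=1`, only one request per second gets through. A larger `capacity` lets short bursts pass before rejections start, which is why a few quick refreshes may still succeed against the real controller.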
